Subagents, Skills, and Image Generation

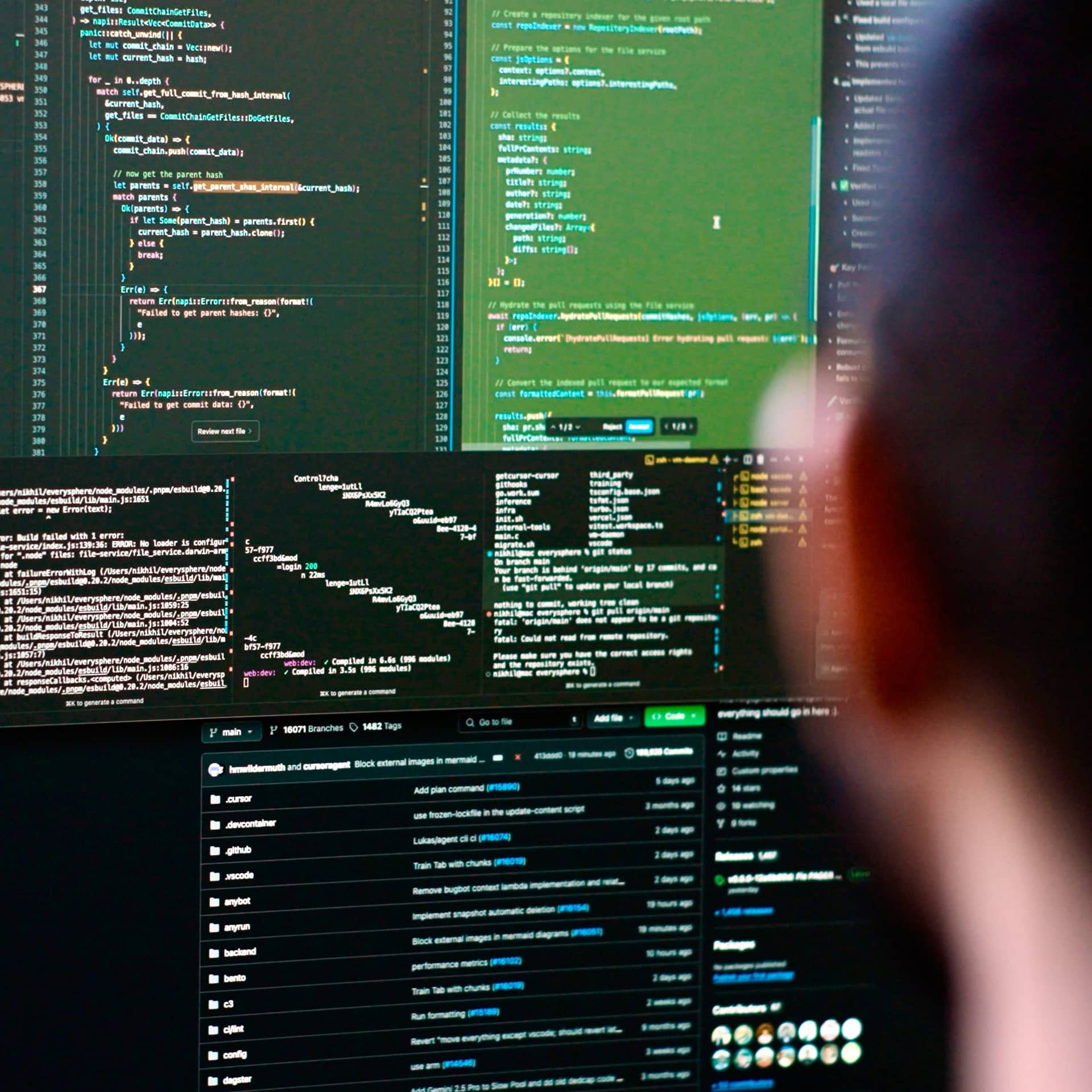

Built to make you extraordinarily productive, Cursor is the best way to code with AI.

Trusted every day by teams that build world-class software

Agent turns ideas into code

Magically accurate autocomplete

In every tool, at every step

The new way to build software.

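“From one batch to the next, adoption jumped from single digits to over 80%. The difference was night and day. It spread like wildfire, and all the best developers are using Cursor.”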

“My favorite enterprise AI service is Cursor. All of our engineers, about 40,000 of them, are now assisted by AI, and our productivity has gone up significantly.”

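“The best LLM applications come with an autonomy slider: you control how much independence to give the AI. In Cursor, you can use Tab completion, Cmd+K for targeted edits, or let it run at full speed in fully autonomous agent mode.”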

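“Cursor quickly grew from hundreds to thousands of extremely enthusiastic users among Stripe employees. We spend more on R&D and software development than on any other undertaking, and making that process more efficient yields significant economic benefits.”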

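“The most useful AI tool I currently pay for is, hands down, Cursor. It's fast, autocompletes when and where you need it, handles brackets properly, has sensible keyboard shortcuts, supports bring-your-own-model... everything is very well put together.”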

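“Being a programmer is definitely becoming more fun. We are at only 1% of what is possible, and it is in interactive experiences like Cursor that models like GPT-5 shine brightest.”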

Cursor is an applied research team focused on building the future of software development.

Latest highlights

Towards self-driving codebases

We're making a part of our multi-agent research harness available to try today in preview.

Salesforce ships higher-quality code across 20,000 developers with Cursor

Over 90% of developers at Salesforce now use Cursor, driving double-digit improvements in cycle time, PR velocity, and code quality.

Best practices for coding with agents

A comprehensive guide to working with coding agents, from starting with plans to managing context, customizing workflows, and reviewing code.